There are different types of digitized images.

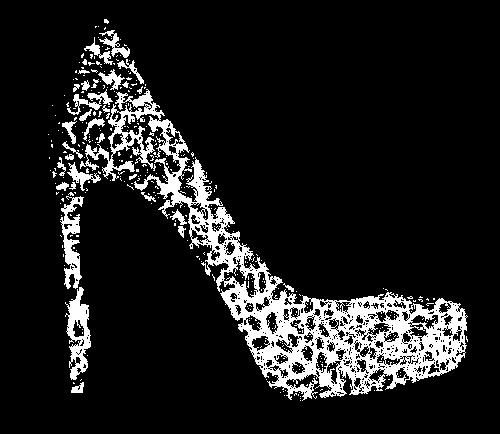

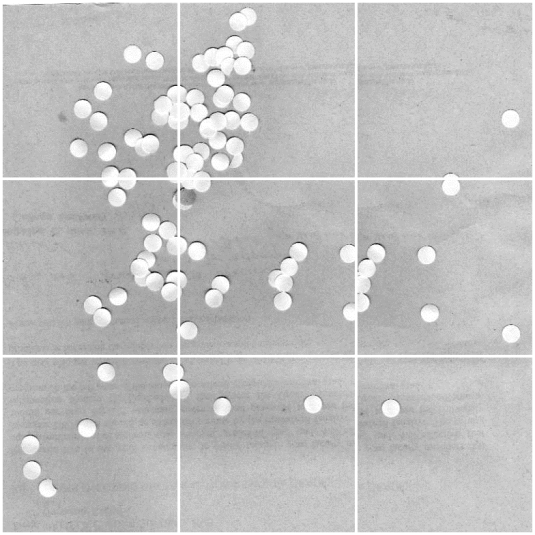

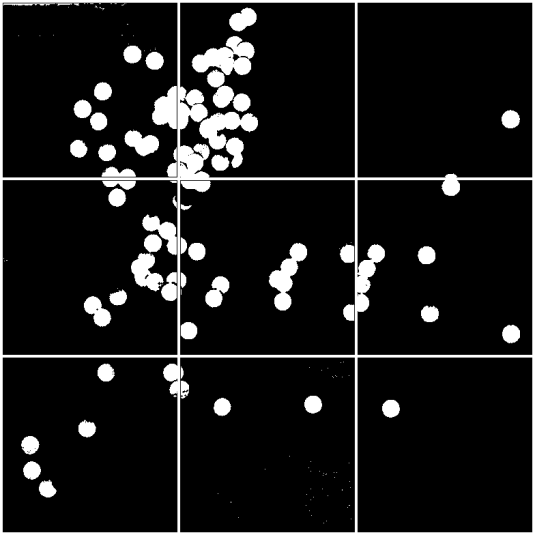

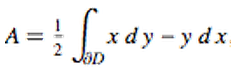

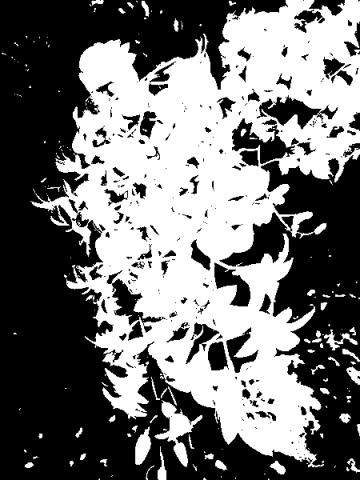

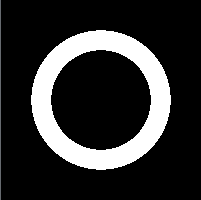

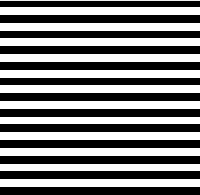

Binary Images

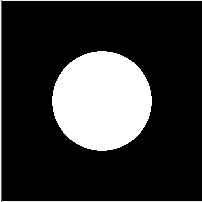

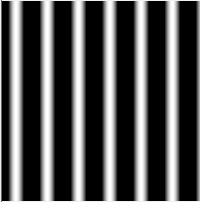

A binary image is typically known as the black and/or white image. This is because each pixel can contains one of two possible values only. Each pixel can store a single bit only which is either 1 or 0. One color serve as the foreground color while the other as background color. In the document scanning industry this is often referred to as bi-tonal.

This type of image is usually used because it is easy to process. Though the downside of this format is the lack of image information it contains. Nevertheless, this format proves useful in many processes such as determining object orientation and sorting objects as it travels in a conveyor belt (e.g. pharmaceutical pills).

image info generated through imfinfo():

Filename: C:\Users\user\Documents\AP186\activity 3\binary_img.jpg

FileSize: 58783.

Width: 582.

Height: 585.

BitDepth: 8.

ColorType: grayscale

A binary image is usually stored in memory as a bitmap, a packed array of bits. A 640×480 image requires 37.5 KiB of storage. Because of the small size of the image files, fax machines and document management solutions usually use this format. Example of this image are text, signatures, and line art, the example shown above. The example image was obtained from the internet. Initially its color type is truecolor, so I converted it to black and white and it worked. Though in displaying the colortype in imfinfo(), only grayscale and truecolor are available.

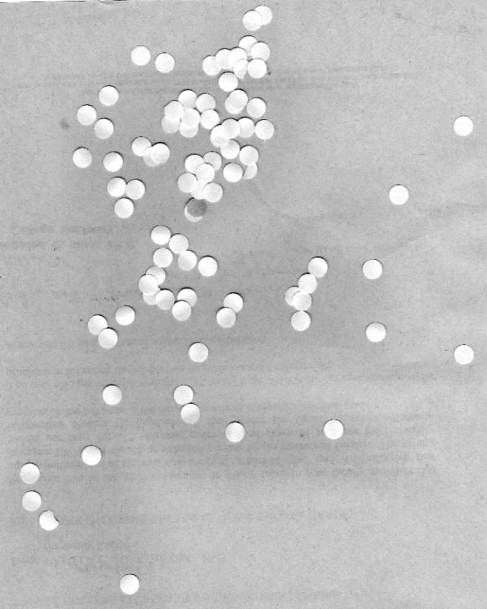

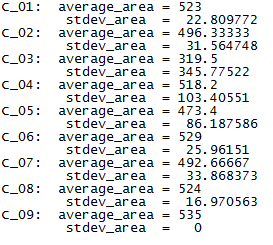

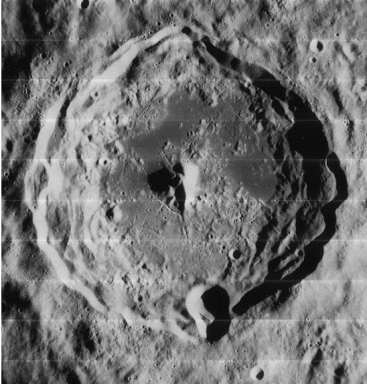

Grayscale images

(Grayscale desc: A grayscale image, as the name implies, is an image that has its color in the scale of gray levels only. The value of each of its pixel contains only intensity information. The gray levels are results of different intensities of black to white or vice versa. This type of image is also pertained as monochromatic. The intensity is expressed as a range of fractional values from 0 to 1 which is total black to total white respectively.

image info generated through imfinfo():

Filename: C:\Users\user\Documents\AP186\activity 3\grayscale_img.jpg

FileSize: 14481.

Width: 332.

Height: 300.

BitDepth: 8.

ColorType: grayscale

File formats such as PNG and TIFF supports 16-bit grayscale images. One technical application of this type of image is on medical imaging.

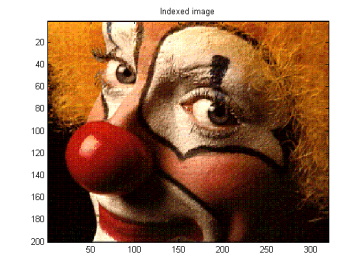

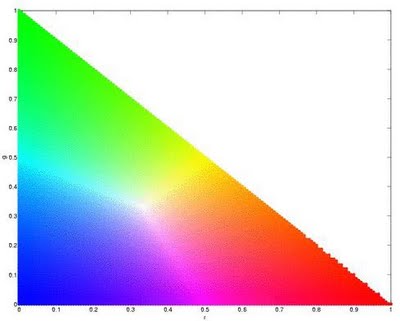

Truecolor images

A True color image is the most common type of image. It supports the three RGB colors which stands for Red, Green and Blue. These three color channels are then combined to create different colors, hues and shade as shown in an image. A truecolor usually has at the least 256 shades of red, green, and blue. By which combining all makes 16,777,216 color variations and almost all can be distinguished by the human eye.

For each pixel, generally one byte is used for each channel while the fourth byte (if present) is being used either as an alpha channel, data, or simply ignored. Byte order is usually either RGB or BGR. Some systems exist with more than 8 bits per channel, and these are often also referred to as truecolor (for example a 48-bit truecolor scanner).

image info generated through imfinfo():

Filename: C:\Users\user\Documents\AP186\activity 3\trueimage.jpg

FileSize: 265624.

Width: 450.

Height: 600.

BitDepth: 8.

ColorType: truecolor

The sample truecolor image shown above is a Cave in Cagayan captured using a digital camera. Image formats such as JPEG, PNG and many more support this type.

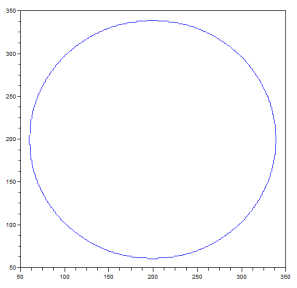

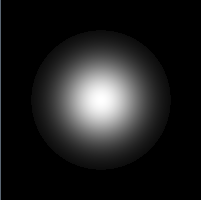

Indexed images

These types of images are made to lessen the memory space occupied on the computer or in memory storage. Instead of the data color being contained directly in the pixel, a separate Color map or Palette is utilized. So there are two data contained in the image: image itself and the palette. The Palette contains an array of colors, each color element having its corresponding index depending on its position in the array. The array contains limited number of color. The usual are 4, 16 and 256. Though this saves memory space it may reduce the color information.

(Small 4- or 16-color palettes are still acceptable for little images or very simple graphics, but to reproduce real life images they become nearly useless.)

image info generated through imfinfo():

Filename: C:\Users\user\Documents\AP186\activity 3\indexed_img.jpg

FileSize: 64993.

Width: 560.

Height: 420.

BitDepth: 8.

ColorType: truecolor

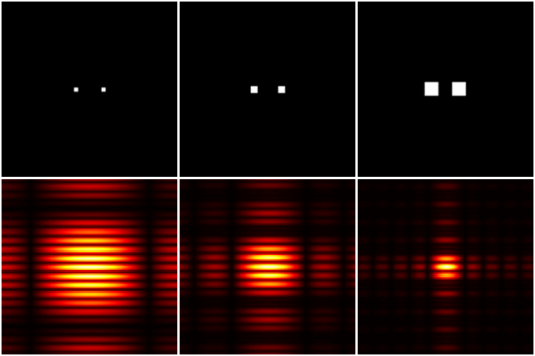

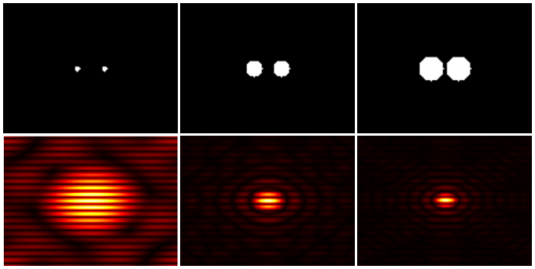

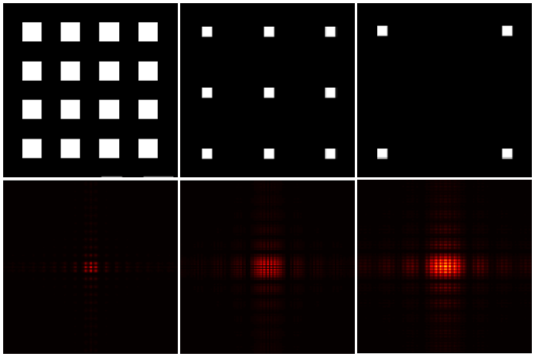

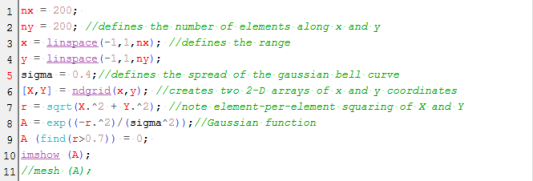

Application of a more complex image processing creates another set of Image types. This new set of types of images also have specific uses.

High Dynamic Range (HDR) images

An HDR image is a result of a digital imaging technique that allows a far greater dynamic range of exposures compared to normal digital imaging techniques. It is usually used in images of scenes, such as fireworks display and shots of the sky, wherein a wide range of intensity levels can be exhibited. This is usually achieved by modifying photos with image processing software for tone-mapping.

Among the image types, I fancy HDR images. I think it’s the impact the image makes due to the intensity of its colors.

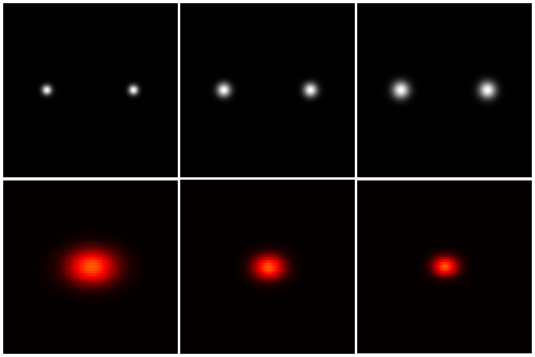

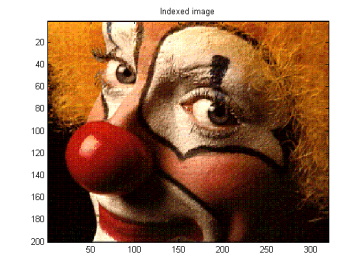

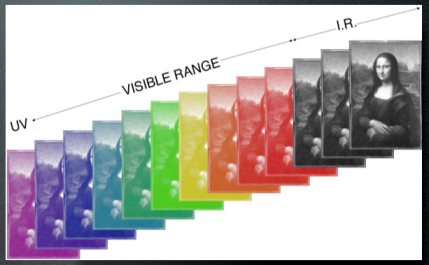

Multispectral/Hyperspectral images

Multispectral imaging deals with several images at discrete and somewhat narrow bands. A multispectral sensor may have many bands covering the spectrum from the visible to the longwave infrared. Multispectral images do not produce the “spectrum” of an object.

On the other hand, Hyperspectral imaging deals with imaging narrow spectral bands over a continuous spectral range, and produce the spectra of all pixels in the scene. So a sensor with only 20 bands can also be hyperspectral when it covers the range from 500 to 700 nm with 20 bands each 10 nm wide.

3-Dimensional (3D) images

a.) A point cloud is a set of vertices in a three-dimensional coordinate system. These vertices are usually defined by X, Y, and Zcoordinates, and typically are intended to be representative of the external surface of an object.

Point clouds are most often created by 3D scanners. These devices measure in an automatic way a large number of points on the surface of an object, and often output a point cloud as a data file. The point cloud represents the set of points that the device has measured.

b.) Cross-eye stereoscopic format is the most popular method for showing 3D on a computer screen, because it does not need any equipment.

Stereo pairs are fused without optical aid in two ways, called X (cross eye) and U (parallel). [X] Big images are fused by going cross-eyed until the two pictures superimpose. Converging the eyes makes them focus close, and it is necessary to wait until the brain adjusts the focus for distant viewing again. Suddenly the pictures fuse as a 3D image. It is possible to look around the picture with the eyes locked into the correct format. [U] Small images are seen the same way as in a 3D viewer, using U or parallel vision. The eyes are relaxed to look into the distance until the images fuse, then refocused by the brain.

c.) (2-dimensional) Image Stacking

Many scanning devices, such as MRI, result in slices through an object, each each 2D slice is normally presented as a grey scale image.

Each pixel in the image corresponds to some characteristic at that point in space, for example protein density in MRI or X-ray absorbtion in CT scans. These images stack together to form a solid representation of the head. The contour approach attempts to trace the outline of the chatacteristic of interest and use these contours to form the surface.

Temporal images/videos

A number of temporal images produces a video. This we see in our daily lives almost all the time. In the sample given below, the concept of Temporal Video is demonstrated. I just thought that this is interesting that’s why I decided to use it as the example. The proponent’s explanation on his idea: ” I remembered slit-scan photography, a method where a slit is moved across the picture plane essentially taking a temporal image, where different times of the scene are captured on different parts of the film.”

If you want to see a more detailed explanation of what the experimenter did, watch the Making of the video here.

Image Formats

In image processing, it is important that one chooses the right format. As technology advances, data storage increases, thus compressing file storage is needed. There are two types of image file compression algorithms: lossless and lossy.

Lossless compression algorithms reduce file size while preserving a perfect copy of the original uncompressed image. Lossless compression generally, but not exclusively, results in larger files than lossy compression. Lossless compression should be used to avoid accumulating stages of re-compression when editing images.

Lossy compression algorithms preserve a representation of the original uncompressed image that may appear to be a perfect copy, but it is not a perfect copy. Oftentimes lossy compression is able to achieve smaller file sizes than lossless compression. Most lossy compression algorithms allow for variable compression that trades image quality for file size.

|

Color data mode -bits per pixel |

| JPG |

RGB – 24-bits (8-bit color),

Grayscale – 8-bits

(only these)JPEG always uses lossy JPG compression, but its degree is selectable, for higher quality and larger files, or lower quality and smaller files. JPG is for photo images, and is the worst possible choice for most graphics or text data. |

| TIF |

Versatile, many formats supported.

Mode: RGB or CMYK or LAB, and others, almost anything.

8 or 16-bits per color channel, called 8 or 16-bit “color” (24 or 48-bit RGB files).

Grayscale – 8 or 16-bits,

Indexed color – 1 to 8-bits,

Line Art (bilevel)- 1-bitFor TIF files, most programs allow either no compression or LZW compression (LZW is lossless, but is less effective for color images). Adobe Photoshop also provides JPG or ZIP compression in TIF files too (but which greatly reduces third party compatibility of TIF files). “Document programs” allow ITCC G3 or G4 compression for 1-bit text (Fax is G3 or G4 TIF files), which is lossless and tremendously effective (small). Many specialized image file types (like camera RAW files) are TIF file format, but using special proprietary data tags. |

Another criteria in choosing the file formats are mainly due to its usage or purpose.

Best file types for these general purposes:

|

Photographic Images |

Graphics, including

Logos or Line art |

| Properties |

Photos are continuous tones, 24-bit color or 8-bit Gray, no text, few lines and edges |

Graphics are often solid colors, with few colors, up to 256 colors, with text or lines and sharp edges |

| For Unquestionable Best Quality |

TIF or PNG (lossless compression

and no JPG artifacts) |

PNG or TIF (lossless compression,

and no JPG artifacts) |

| Smallest File Size |

JPG with a higher Quality factor can be decent. |

TIF LZW or GIF or PNG (graphics/logos without gradients normally permit indexed color of 2 to 16 colors for smallest file size) |

Maximum Compatibility

(PC, Mac, Unix) |

TIF or JPG |

TIF or GIF |

| Worst Choice |

256 color GIF is very limited color, and is a larger file than 24 -bit JPG |

JPG compression adds artifacts, smears text and lines and edges |

Other Image formats are as given below

JPEG 2000

JPEG 2000 is a compression standard enabling both lossless and lossy storage. The compression methods used are different from the ones in standard JFIF/JPEG; they improve quality and compression ratios, but also require more computational power to process. JPEG 2000 also adds features that are missing in JPEG. It is not nearly as common as JPEG, but it is used currently in professional movie editing and distribution (e.g., some digital cinemas use JPEG 2000 for individual movie frames).

Exif

The Exif (Exchangeable image file format) format is a file standard similar to the JFIF format with TIFF extensions; it is incorporated in the JPEG-writing software used in most cameras. Its purpose is to record and to standardize the exchange of images with image metadata between digital cameras and editing and viewing software. The metadata are recorded for individual images and include such things as camera settings, time and date, shutter speed, exposure, image size, compression, name of camera, color information. When images are viewed or edited by image editing software, all of this image information can be displayed. It stores meta informations.

RAW

RAW refers to a family of raw image formats that are options available on some digital cameras. These formats usually use a lossless or nearly-lossless compression, and produce file sizes much smaller than the TIFF formats of full-size processed images from the same cameras. Although there is a standard raw image format, (ISO 12234-2, TIFF/EP), the raw formats used by most cameras are not standardized or documented, and differ among camera manufacturers.

BMP

The BMP file format (Windows bitmap) handles graphics files within the Microsoft Windows OS. Typically, BMP files are uncompressed, hence they are large; the advantage is their simplicity and wide acceptance in Windows programs.

PPM, PGM, PBM, PNM

Netpbm format is a family including the portable pixmap file format (PPM), the portable graymap file format (PGM) and the portable bitmap file format (PBM). These are either pureASCII files or raw binary files with an ASCII header that provide very basic functionality and serve as a lowest-common-denominator for converting pixmap, graymap, or bitmap files between different platforms. Several applications refer to them collectively as PNM format (Portable Any Map).

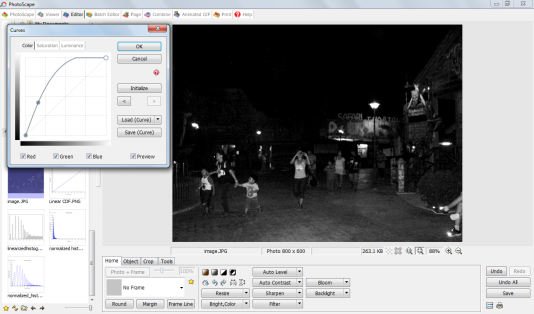

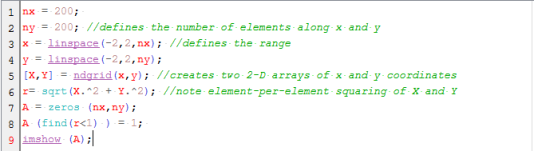

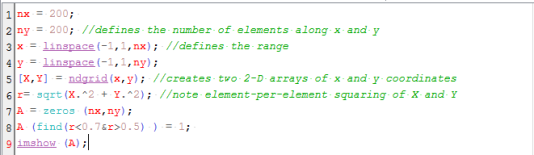

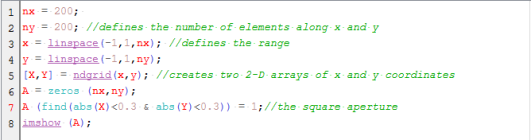

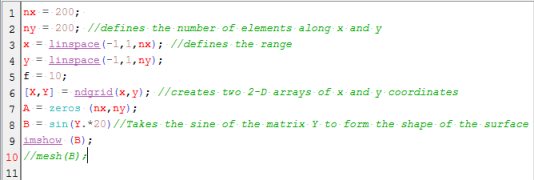

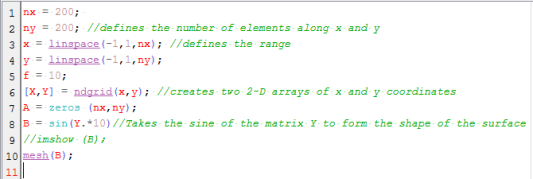

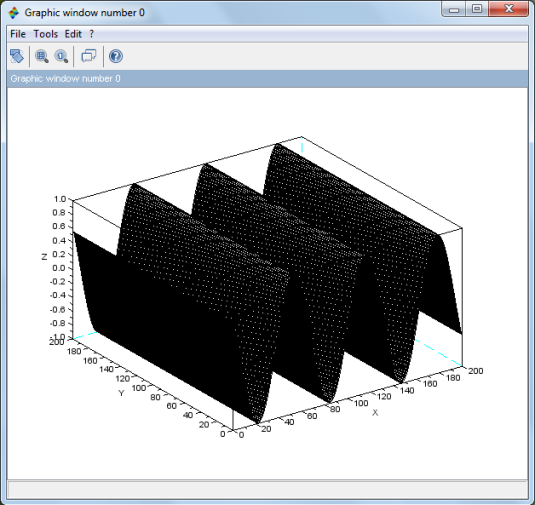

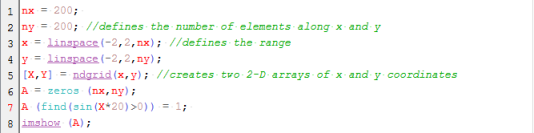

Now, some applications of what I learned in Scilab and the research done in Image file types and formats. 🙂

I used the Scilab and the newer version of SIP toolbox which is the SIVP toolbox.

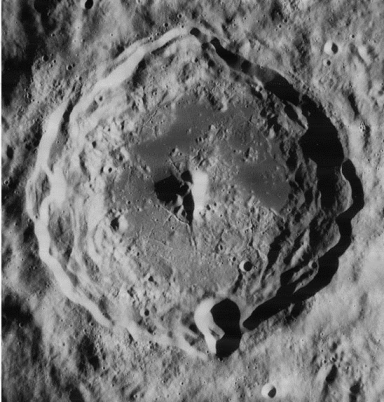

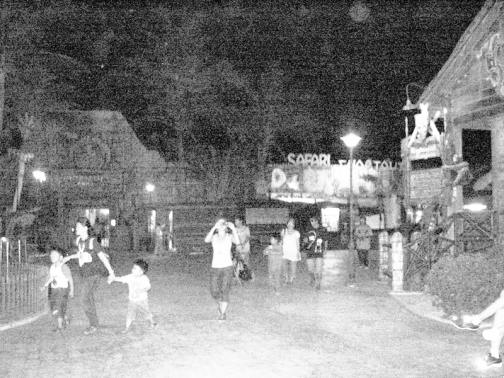

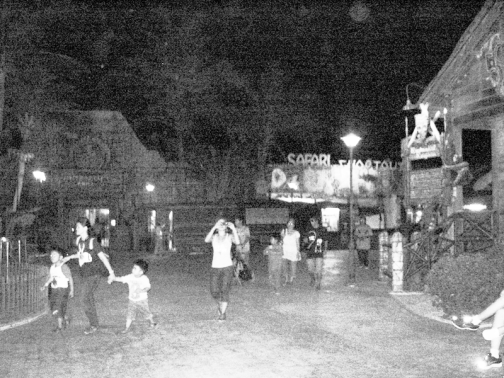

First, I obtained an image, captured using a Canon Digital camera, archived in my computer. This is a truecolor image.

stacksize(10000000);

I = imread('C:\Users\user\Documents\AP186\activity 3\tru_image4.jpg');

size(I)

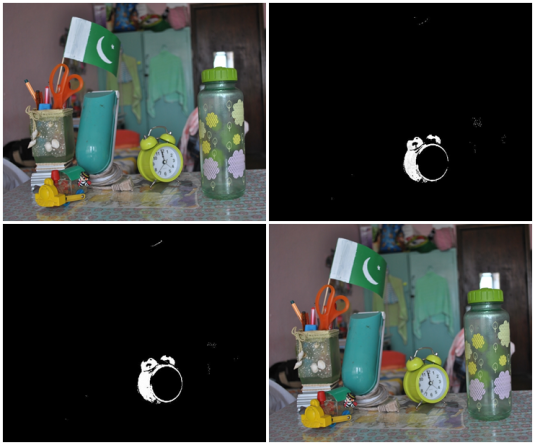

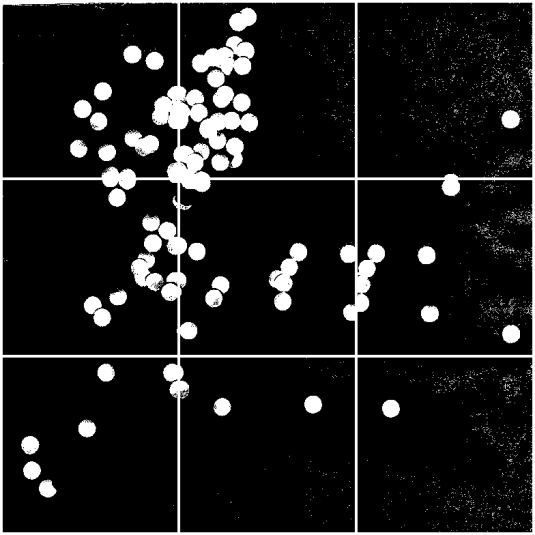

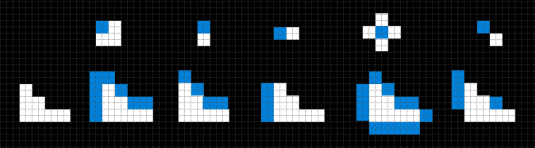

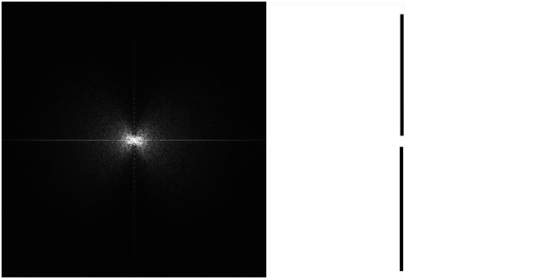

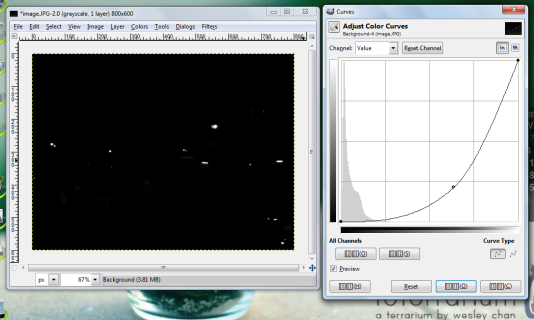

Converting the truecolor image to grayscale is done by using the rgb2gray() function.

I2 = rgb2gray(I);

imshow(I2);

imwrite(I2, 'tru_image4_gray.png')

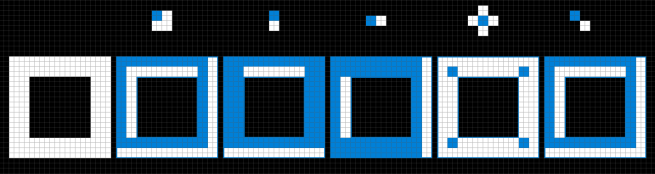

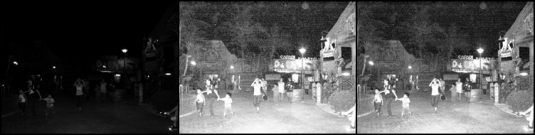

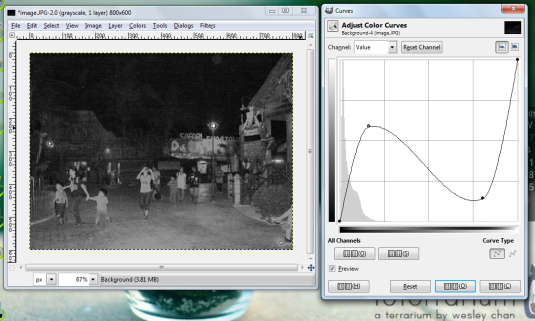

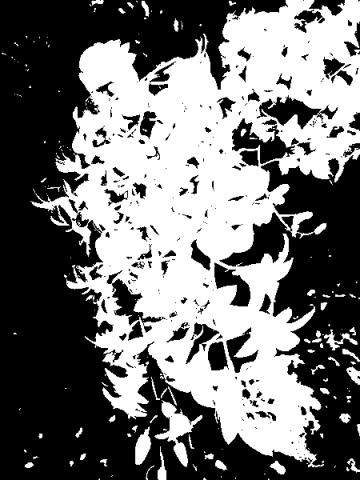

Then converting the truecolor to black and white, the im2bw() function is utilized. But then, aside from the image to be converted, a threshold value is required. The threshold value determines the pixel that qualifies as 0 (white) or1 (black). Basically it sorts out the pixel as black or white. I placed a value that I thought produces the best B&W image.

I3 = im2bw(I,0.3);

imshow(I3);

imwrite(I3,'tru_image4_bw.png')

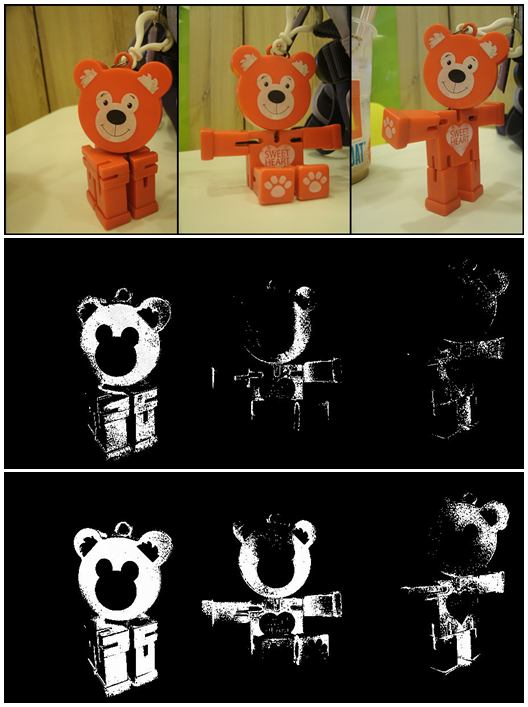

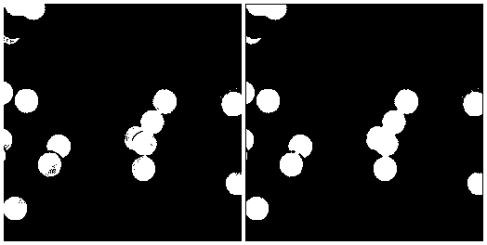

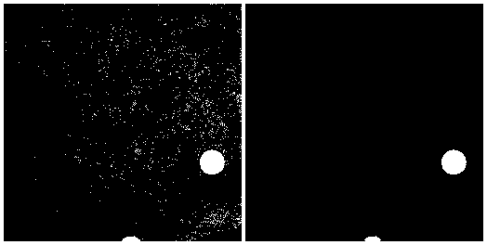

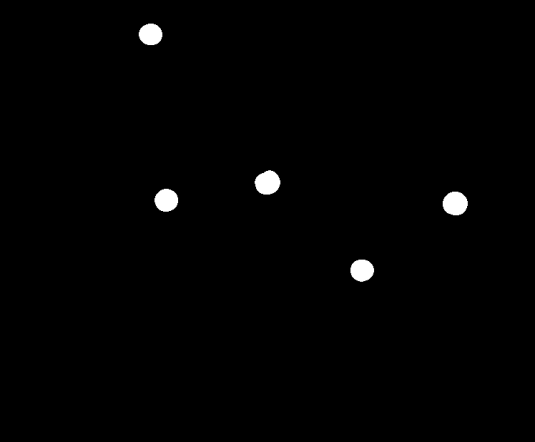

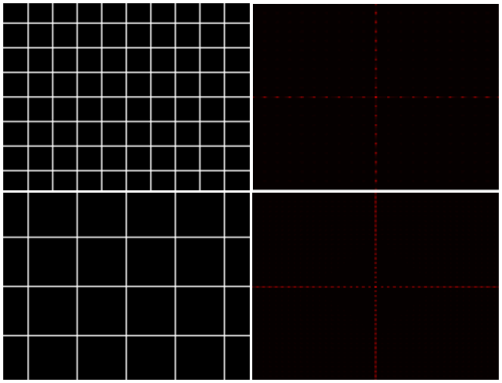

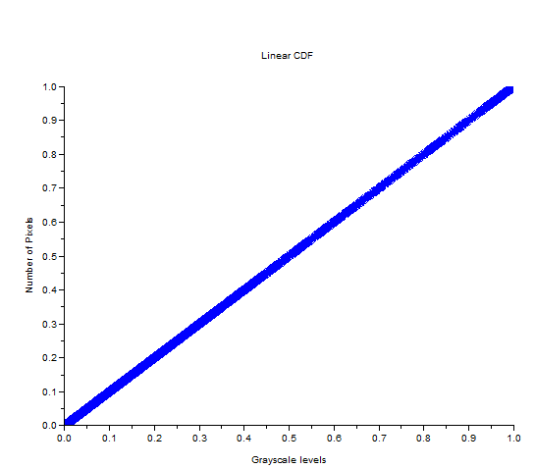

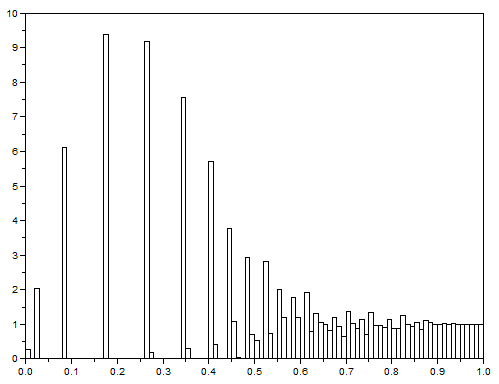

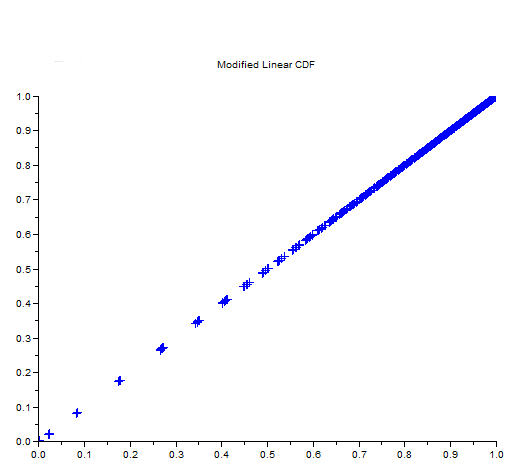

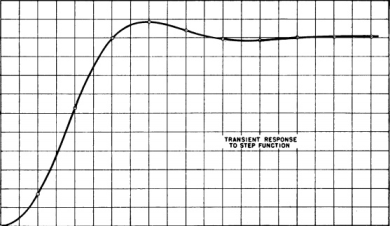

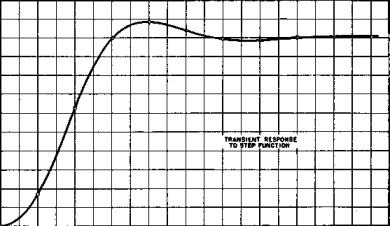

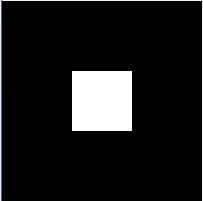

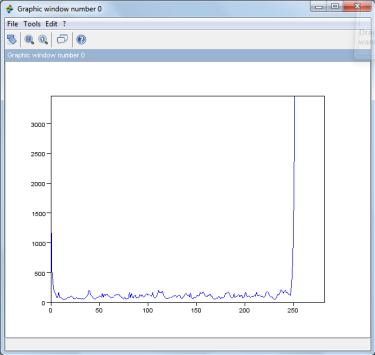

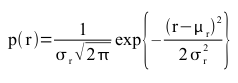

In converting the image to black and white, the goal is also to separate the foreground from the background. A more efficient way of doing this is by obtaining the histogram of gray levels of the grayscale version of the truecolor image. To demonstrate , I used the hand-drawn plot image from the first activity.

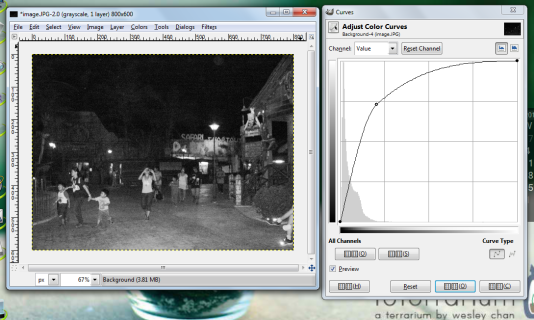

The image was first converted to grayscale using rgb2gray(). If your using SIP toolbox, there’s a straightforward function that simultaneously reads an image and converts it to gray scale: the gray_imread(). But since I’m using the SIVP toolbox and there’s no counterpart function for that, the rgb2gray() will do.

J = imread('C:\Users\user\Documents\AP186\activity 3\p1.jpg');

J1 = rgb2gray(J);

imshow(J1);

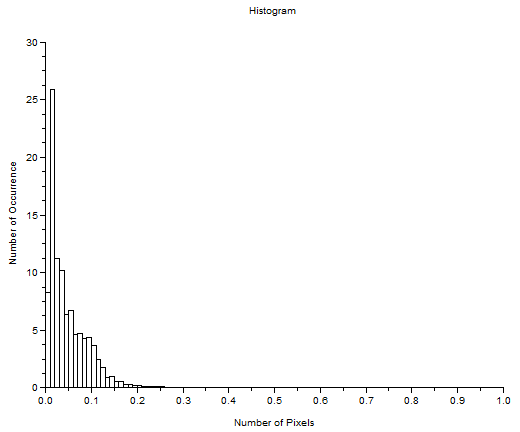

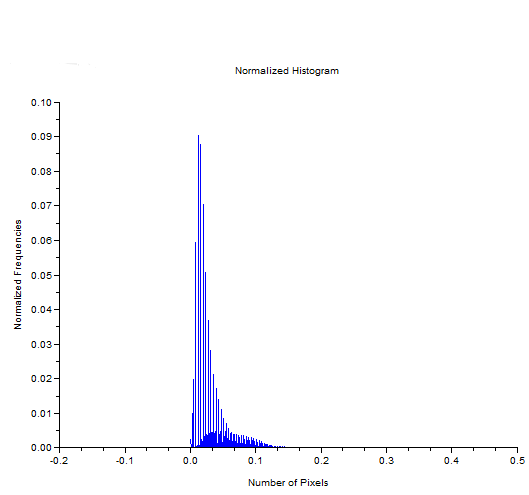

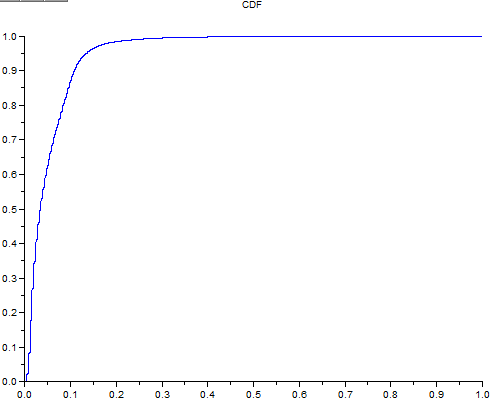

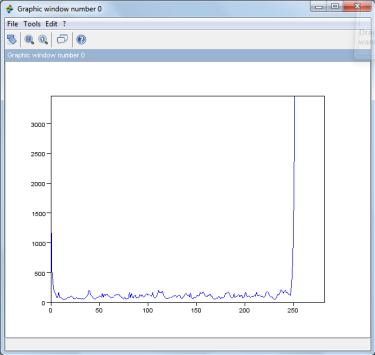

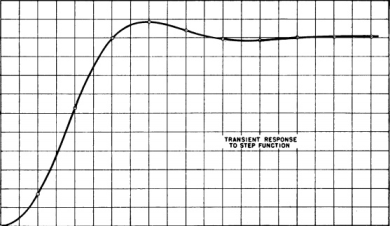

I obtained the histogram of the grayscale image but it was too concentrated in the right part of the graph. But a better plot that shows the gray levels is by plotting the cells vs count as shown in the code below. Zooming in the lower part displays a clearer distribution.

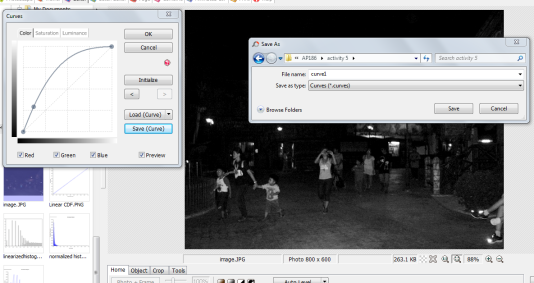

[count, cells]=imhist(J1);

imwrite(J2,'p1_gray_hist.png')

J2 = im2bw(J,0.75);

imshow(J2);

From this, I decided on using on the average a threshold value of 0.75 . And the resulting black and white image is shown below.

Learning and playing with the different types, formats and properties of images is really fun though somewhat exhausting. 🙂

For this activity I give myself a 10. 🙂

References:

binary desc: http://en.wikipedia.org/wiki/Binary_image

binary image: http://xaomi.deviantart.com/art/Line-art-73599773

grayscale desc: http://en.wikipedia.org/wiki/Grayscale

grayscale image: http://www.codeproject.com/Articles/33838/Image-Processing-using-C

indexed image : http://blogs.mathworks.com/steve/2006/02/03/all-about-pixel-colors-part-2/

hdr image: http://www.smashingmagazine.com/2008/03/10/35-fantastic-hdr-pictures/

multi/hyperspectral: http://en.wikipedia.org/wiki/File:MultispectralComparedToHyperspectral.jpg

3d image:

pointcloud:http://www.severnpartnership.com/case_studies/architectural_measured_building_survey_bim/harper_adams_university_campus_buildings

stereopair:http://www.flickr.com/photos/kiwizone/1645102785/sizes/m/in/set-72157602440689327/

mri: http://paulbourke.net/miscellaneous/cortex

image formats: http://www.scantips.com/basics09.html

(1)

(1)